
EKS microservices with full encryption in transit

Posted on July 18, 2024

By Alex Richardson (Director of Engineering) & Ravi Ramanuj (Lead DevSecOps)

Setting the scene

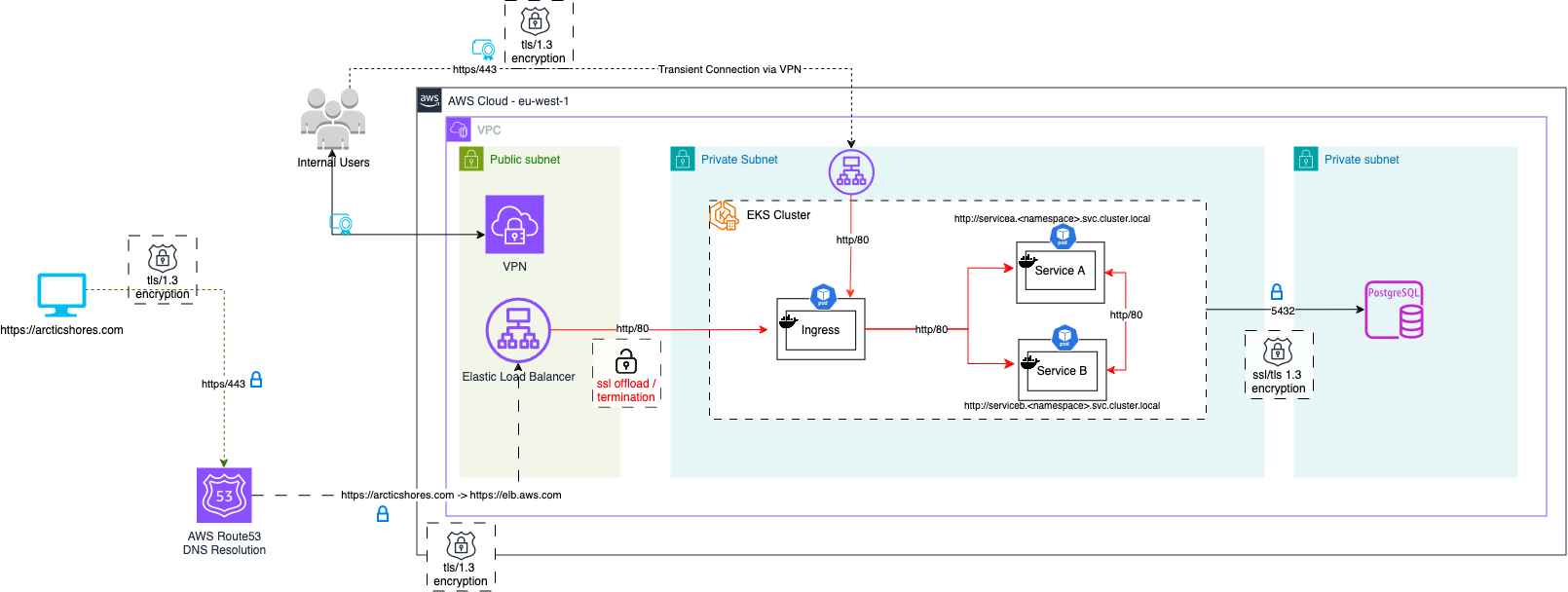

At Arctic Shores we take data and infrastructure security very seriously: we store both client and candidate data, so we have a responsibility to protect it. Until recently our infrastructure was a classic public/private network split. Our load balancer sat in the public network, our Kubernetes cluster was protected in the private network, and our databases were separated again in private VPCs.

While this pattern has served us well (and is widely used), it only encrypts traffic up to the load balancer. Everything after that point, including traffic into the cluster, travels in plain text. With a classic on-prem approach that's an acceptable risk: you own the network, and you know that communication within a subnet doesn't leave your data centre. In the cloud, however, a subnet is only a virtual concept. Two parts of a subnet may live in different parts of a data centre and may pass through several routers to reach their destination, all in plain text. Data moving between AZs must also leave the AWS data centre itself (though Amazon assure us that all such traffic is bulk encrypted).

So while the risk here is small (that of a router within AWS being compromised), it still annoyed us, and it was an area where we felt we could do better.

Therefore, we set out to identify and encrypt absolutely all HTTP traffic within UNA.

All traffic types and ingress

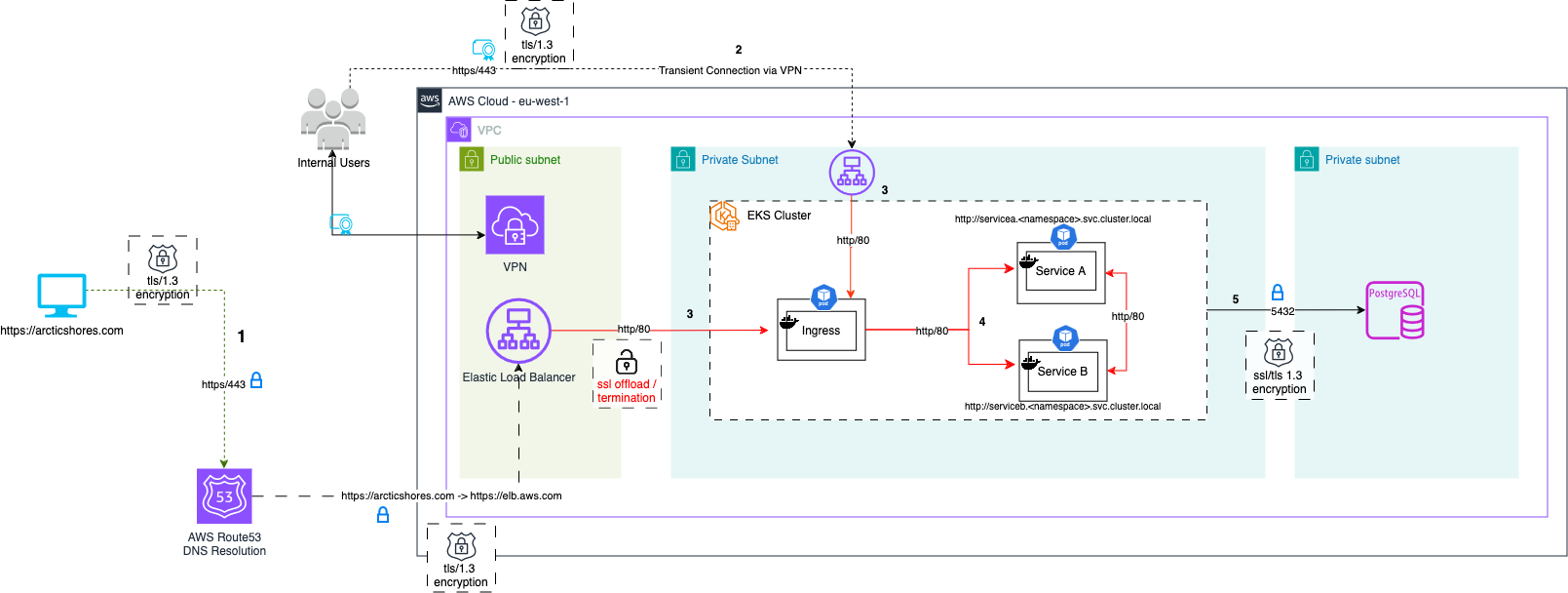

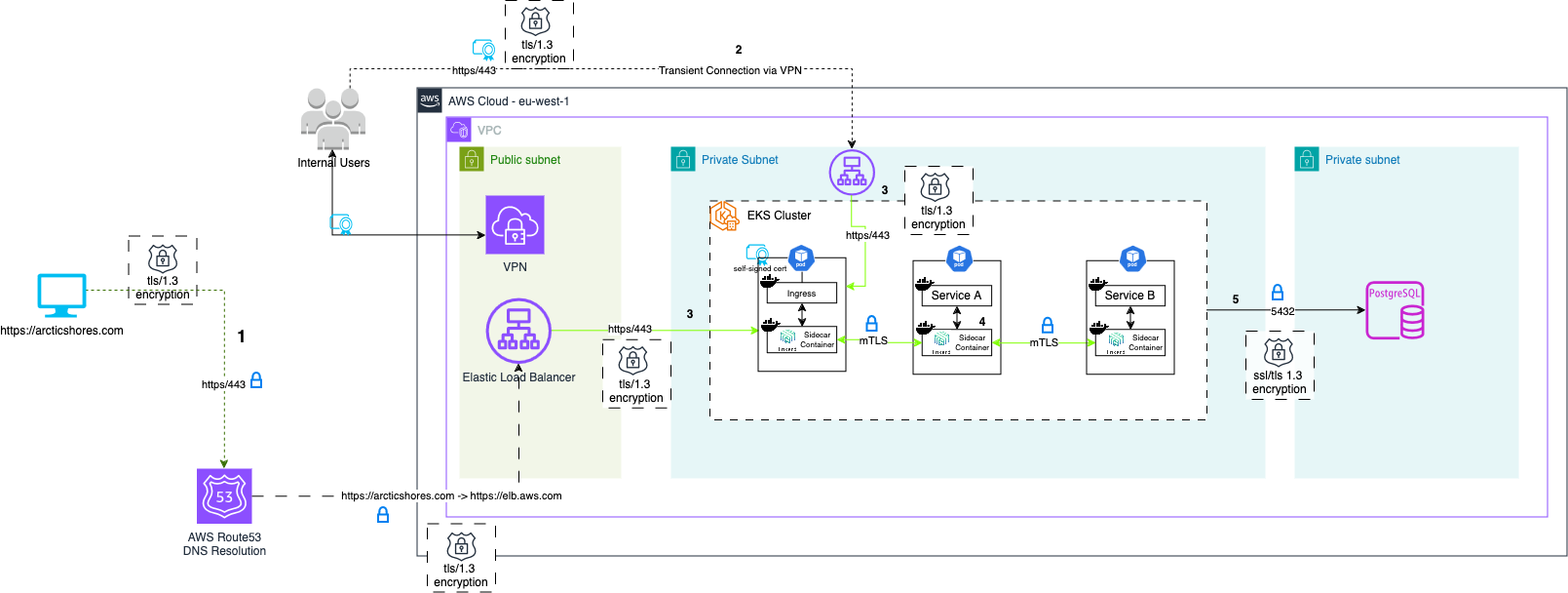

As you can see from the diagram, we have five forms of network traffic to handle:

- Traffic into our public ALB (all candidate- and customer-facing UNA traffic)

- Traffic to our private ALB via our VPN (developer access and internal tooling)

- Traffic from each load balancer to the cluster

- Traffic within the cluster

- Traffic to our databases

From the off, the most important parts were already well encrypted: all external traffic to either load balancer is encrypted and requires TLS 1.3.
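On an ALB, you enforce TLS 1.3 by choosing a listener security policy, not by writing code. To illustrate the same constraint, here is a minimal sketch using Python's standard `ssl` module. It builds a server-side context that rejects any handshake below TLS 1.3. This is not how the ALB itself is configured, and the certificate paths in the comment are hypothetical.

```python
import ssl


def make_tls13_server_context() -> ssl.SSLContext:
    """Server-side context that refuses handshakes older than TLS 1.3."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Clients that only offer TLS 1.2 or below fail the handshake.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    # A real listener would also load its own certificate, e.g.:
    # ctx.load_cert_chain("server.crt", "server.key")  # hypothetical paths
    return ctx


if __name__ == "__main__":
    ctx = make_tls13_server_context()
    print(ctx.minimum_version.name)  # TLSv1_3
```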

Traffic to our Postgres instances is encrypted with TLS over port 5432, and traffic to DynamoDB goes through the AWS SDK, which encrypts all traffic.

That leaves us with two main problems to tackle:

- Service-to-service communication within our Kubernetes cluster

- Ingress to the cluster from our load balancers

Encrypting the cluster

Why bother encrypting traffic in a private EKS cluster?

Depending on the type of data your application handles, you may need to go above and beyond to ensure your environment is bulletproof. If your application handles healthcare information (HIPAA) or payment card data (PCI DSS), you may be subject to regulations that mandate that data integrity be preserved during transmission. This often requires end-to-end encryption, ensuring that data remains encrypted from the client to its destination without any point of decryption.

Encrypting your private cluster is also a crucial step towards a Zero Trust environment (something we'll revisit later). In a Zero Trust security model you assume that EVERYTHING is compromised. You operate on the premise that your private network is just as vulnerable and susceptible to attack as the public network.

Both environments should therefore have the same hardening, firewalling and, of course, encryption.

mTLS vs TLS

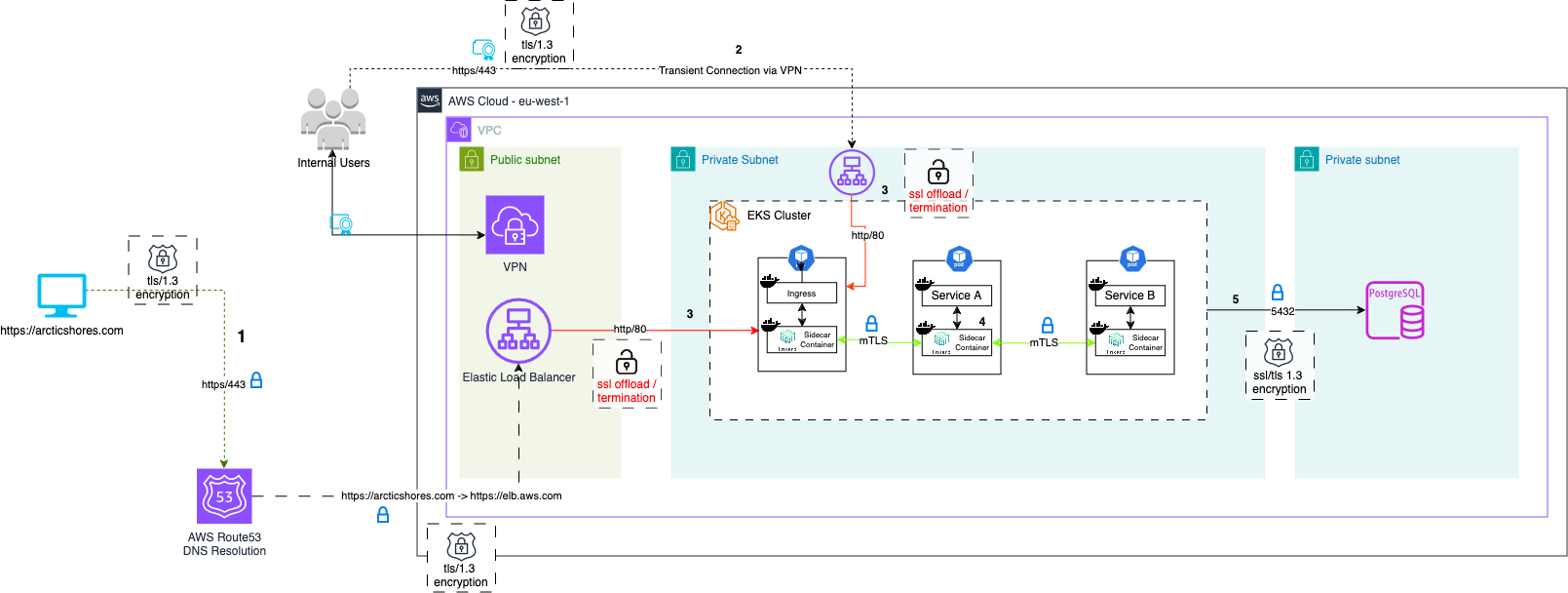

Looking at connection 3 on the diagram, traffic switches from TLS (the protocol HTTPS runs over) to mTLS. TLS covers the hop from the client to the public and private load balancers; mTLS is used within the private EKS cluster.

Just like TLS, mTLS encrypts in-transit traffic, protecting it from interception, eavesdropping, and tampering, thereby maintaining data integrity.

However, TLS provides one-way authentication: the server proves its identity to the client. mTLS provides mutual authentication (hence the m). It verifies the identities of both communicating parties, ensuring each side is who it claims to be and giving us a greater degree of security and control.
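The difference is easiest to see on the server side. Below is a minimal sketch using Python's standard `ssl` module; it is not how Linkerd's proxies are implemented. The only change between the TLS and mTLS server contexts is that the mTLS one requires, and verifies, a client certificate. The CA file argument is a hypothetical placeholder.

```python
import ssl
from typing import Optional


def tls_server_context() -> ssl.SSLContext:
    """One-way TLS: only the server proves its identity."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # The server does not ask the client for a certificate.
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def mtls_server_context(client_ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Mutual TLS: the client must also present a certificate we trust."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # The handshake fails unless the client sends a certificate that chains
    # to a CA loaded into the verify locations.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if client_ca_file is not None:
        ctx.load_verify_locations(cafile=client_ca_file)
    return ctx


# Both contexts would also need ctx.load_cert_chain(...) with the server's own
# certificate and key before being used to wrap a listening socket.
```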

Each service presents a certificate that identifies it. The mesh can use those identities to enforce which services are allowed to communicate, effectively letting us implement RBAC at the service level.
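In Linkerd this is configured declaratively with policy resources (`Server`, `AuthorizationPolicy` and `MeshTLSAuthentication`), not in code. The decision those resources encode can be sketched as a default-deny allow-list keyed on the identity each workload proves with its certificate. The identity format below assumes a default Linkerd installation (`<service-account>.<namespace>.serviceaccount.identity.linkerd.cluster.local`). The workload names and the policy itself are hypothetical.

```python
from dataclasses import dataclass

# Assumed suffix for a default Linkerd install: control plane in the "linkerd"
# namespace and trust domain "cluster.local".
IDENTITY_SUFFIX = ".serviceaccount.identity.linkerd.cluster.local"


@dataclass(frozen=True)
class Workload:
    service_account: str
    namespace: str


def parse_identity(identity: str) -> Workload:
    """Split '<service-account>.<namespace>' off a mesh identity string."""
    if not identity.endswith(IDENTITY_SUFFIX):
        raise ValueError(f"not a mesh identity: {identity!r}")
    prefix = identity[: -len(IDENTITY_SUFFIX)]
    # Namespace names cannot contain dots, so the last dot separates them.
    service_account, sep, namespace = prefix.rpartition(".")
    if not sep or not service_account or not namespace:
        raise ValueError(f"malformed mesh identity: {identity!r}")
    return Workload(service_account, namespace)


# Hypothetical policy: which callers may reach each destination workload.
ALLOWED_CALLERS = {
    Workload("orders", "app"): {Workload("ingress-nginx", "ingress-nginx")},
}


def is_allowed(destination: Workload, peer_identity: str) -> bool:
    """Default-deny check on the identity proven by the peer's certificate."""
    try:
        caller = parse_identity(peer_identity)
    except ValueError:
        return False  # no valid mesh identity, no access
    return caller in ALLOWED_CALLERS.get(destination, set())
```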

mTLS Integration with Service Mesh

Implementing mTLS in your cluster is straightforward since it ships out of the box with a Service Mesh like Istio, Kong, or Linkerd, all of which are free and open source.

A Service Mesh is an incredibly powerful tool for your cluster. This post won't cover all the benefits in detail, but Service Meshes provide features beyond mTLS, such as API rate limiting, circuit breakers and advanced service-level observability.

It’s worth experimenting with different Service Meshes to determine which one works best for your application.

At Arctic Shores, we settled on Linkerd within our cluster because it is simple and lightweight. We liked how easy it was to set up and manage, with minimal configuration needed to get started. Whilst Linkerd's simplicity proved advantageous for us, it does come at the cost of fewer features compared to other service meshes like Istio.

TLS & mTLS in Action

Starting from connection 1 on the diagram, you’ll see that our external users access the ALB sitting in the public subnet where data is encrypted in transit using HTTPS/TLS 1.3.

Once the data from the user reaches the load balancer, TLS is terminated (TLS offload), which means it's decrypted on the load balancer.

The decrypted, plain-text data (connection 3) is then sent to the EKS Nginx Ingress Controller. At this point, mTLS takes over to handle encryption within the cluster via the Linkerd Sidecar Container (connection 4).

When Linkerd is enabled for a namespace, each pod in that namespace is injected with a sidecar proxy container that intercepts traffic to and from the primary container.
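Injection is switched on with the `linkerd.io/inject: enabled` annotation, set either on a namespace or on a pod template. Here is a minimal sketch that emits such a namespace manifest as JSON, assuming a hypothetical namespace called `app`:

```python
import json


def linkerd_injected_namespace(name: str) -> dict:
    """Namespace manifest asking Linkerd's proxy injector to add sidecars."""
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": name,
            # Pods created in this namespace get the linkerd-proxy sidecar.
            "annotations": {"linkerd.io/inject": "enabled"},
        },
    }


if __name__ == "__main__":
    # kubectl accepts JSON manifests, e.g. `python ns.py | kubectl apply -f -`
    print(json.dumps(linkerd_injected_namespace("app"), indent=2))
```

The injector runs as an admission webhook, so it only injects pods created after the annotation is in place. Existing workloads need a restart (for example with `kubectl rollout restart`) to pick up the proxy.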

The Service Mesh, configured with certificates, handles traffic decryption before it reaches the application container and re-encrypts it on the way out. This process validates incoming traffic and can deny unauthorized traffic.

However, a system is only as secure as its weakest part, and you can still see the unencrypted flow of data from the load balancer to the cluster (over HTTP) after the TLS offload.

Encrypting the last mile

Returning to the Zero Trust model: if we assume that our private network is public, the same rationale that led us to implement mTLS in the private cluster should compel us to encrypt the 'last mile' too. That means removing the TLS offload altogether. After all, we would not be comfortable sending plain text over the internet.

This raises the question: why did we offload in the first place?

TLS Offload

TLS/SSL offloading refers to the point in your network architecture at which encrypted traffic is decrypted. From there it travels as plain text from the offloading device (in our case the load balancer) to the servers that consume it.

Offloading TLS at some point in your architecture is mainly done to reduce load on your backend servers. They no longer need to perform expensive cryptographic operations and can focus purely on business logic. It's really a performance/security trade-off. As we discussed earlier, it was more common in legacy on-prem three-tier architectures, where the security risk was small because all the systems were known and controlled.

The cloud introduces a plethora of new risks, so the security implications of TLS offloading are no longer trivial. It is increasingly common for security experts and auditors to recommend forgoing TLS offloading altogether.

Further, the same end-to-end encryption requirements mandated for regulatory data integrity also apply here. SSL offloading decrypts data at the load balancer or proxy server, which could violate these requirements. Always err on the side of caution when handling sensitive data; don't let this be the chink in your armour!

The Solution

We found surprisingly little information on this area during our research. Service meshes are well established, but if the hop into the mesh is not encrypted, you are not encrypted end to end. We solved this easily thanks to a curious quirk of ALBs: they don't verify the certificate presented by their targets. All our load balancer traffic first hits the NGINX ingress controller inside our cluster. Together, these facts meant we could host a self-signed certificate on the NGINX controller and move the load balancer traffic over to port 443/HTTPS. All subsequent traffic from the NGINX controller to our services is then encrypted with mTLS via Linkerd.
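AWS documents that an ALB does not validate the certificates its targets present. That is why a self-signed certificate on the ingress is enough to encrypt this hop. As a hedged illustration only, the sketch below reproduces that behaviour with Python's `ssl` module: traffic on the hop is encrypted, but nobody checks the server's identity. For contrast, the default context is shown as well.

```python
import ssl


def alb_style_backend_context() -> ssl.SSLContext:
    """Client context that encrypts but, like an ALB, skips cert verification."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    # PROTOCOL_TLS_CLIENT starts with check_hostname=True and CERT_REQUIRED;
    # hostname checking must be switched off before verification can be.
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def verifying_backend_context() -> ssl.SSLContext:
    """For contrast: the default context rejects a self-signed certificate."""
    return ssl.create_default_context()
```

With the first context, `ctx.wrap_socket(sock, server_hostname=...)` completes a handshake against the ingress's self-signed certificate. The default context would fail with `ssl.SSLCertVerificationError` instead.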

Therefore, the flow for a request to UNA is as follows:

- An HTTPS connection is made to our public load balancer. The TLS connection is terminated here, and the load balancer presents the una-arcticshores.com certificate to the user.

- A new HTTPS connection is made down to the NGINX ingress instance within the cluster. The load balancer receives the self-signed certificate but doesn't verify it.

- NGINX routes the traffic to the correct service, and Linkerd steps in to encrypt all traffic between the Kubernetes services using mTLS.

- Calls from the service to the relevant databases are made over TLS (HTTPS in the case of DynamoDB).
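One way to stop this property from regressing is to describe each hop and check it. The model below is a hypothetical sketch; the `Hop` type and its fields are our own invention, not part of any tool. It covers the HTTP request path described above (the database hop is omitted). It confirms that no hop is plain text and flags the one hop that is encrypted but unauthenticated, which is the weakness the footnote discusses.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Hop:
    source: str
    destination: str
    protocol: str        # "HTTP", "HTTPS" or "mTLS"
    peer_verified: bool  # does the client check the server's certificate?


ENCRYPTED_PROTOCOLS = {"HTTPS", "mTLS"}

REQUEST_PATH = [
    Hop("user", "public ALB", "HTTPS", True),
    Hop("public ALB", "nginx ingress", "HTTPS", False),  # self-signed, unverified
    Hop("nginx ingress", "service", "mTLS", True),        # Linkerd sidecars
]


def plaintext_hops(path: List[Hop]) -> List[Hop]:
    """Hops where traffic is not encrypted at all."""
    return [hop for hop in path if hop.protocol not in ENCRYPTED_PROTOCOLS]


def unverified_hops(path: List[Hop]) -> List[Hop]:
    """Encrypted hops whose server identity is never checked."""
    return [hop for hop in path
            if hop.protocol in ENCRYPTED_PROTOCOLS and not hop.peer_verified]


if __name__ == "__main__":
    assert not plaintext_hops(REQUEST_PATH)
    for hop in unverified_hops(REQUEST_PATH):
        print(f"encrypted but unverified: {hop.source} -> {hop.destination}")
```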

Footnote: We chose to use HTTPS/TLS to encrypt the last mile (load balancers -> cluster). However, TLS is typically designed to encrypt traffic between servers and unknown clients. The clients within our private network are known to the load balancer, so a more secure solution would be to use mTLS for the internal load balancer traffic, as we already do via Linkerd for traffic within the cluster. mTLS is now natively supported by ALBs, so it may be worth considering: https://aws.amazon.com/blogs/networking-and-content-delivery/introducing-mtls-for-application-load-balancer. (Note: a self-signed certificate would not work in this case; you would need to manage the certificates via an ALB trust store.)

Conclusion

Follow the Zero Trust model and always operate on the premise that your private network is just as exposed as your public network. Harden your internal resources with the same rigor you would apply to your DMZ.

Assess the data you are processing and understand the regulations governing its handling. Read between the lines to see whether those regulations permit TLS offloading or require encryption for the entire journey. Understand holistically where your data is going and the security requirements it must adhere to.

While AWS does a great job of virtualizing and abstracting the classic networking concepts of on-prem data centres, it's still important to know where your data actually is and where it is going.

We always ensure our data is encrypted at rest despite the safety and assurances we get from AWS, so it makes sense to ensure it’s fully encrypted while in transit too.

We'd certainly recommend Linkerd as a service mesh. It has many features and advantages not covered in this post, but its out-of-the-box mTLS encryption of traffic is an easy win for your security posture.